Measuring Up?

PREAL publication analyzes how Latin American and Caribbean countries performed on PISA tests.

This post is also available in: Spanish

In April 2014, four months after the PISA 2012 (Programme for International Student Assessment) results were released, the Inter-American Dialogue published an article summarizing how different actors reacted to the results of Latin American and Caribbean (LAC) countries. All eight participating LAC countries scored in the lowest third in reading, mathematics and science, so it came as no surprise that the results drew strong reactions from actors in different sectors.

On December 6, 2016, the PISA 2015 results were released, and again they prompted immediate reactions. As in 2012, the participating LAC countries (10 this time) scored in the bottom half across all three subjects, but some countries showed relative improvements. How did LAC media, civil society and governments react to the results?

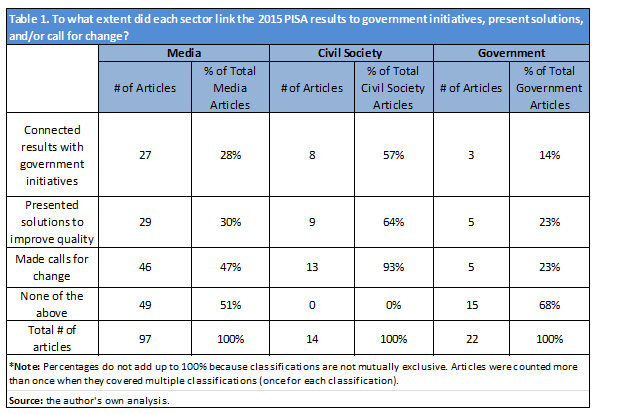

After reviewing 133 online sources from the ten LAC countries that took the PISA 2015 examination, published between December 6, 2016, and January 23, 2017, we reached several conclusions.

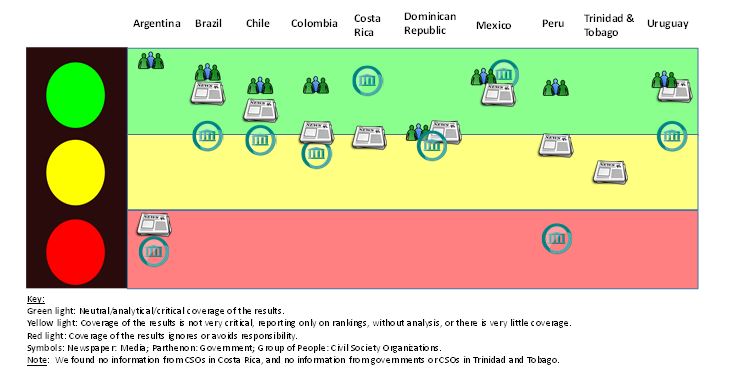

In half of the participating LAC countries, coverage was critical of the results but acknowledged the improvement in scores. For instance, Colombian and Peruvian articles generally conveyed a sense of pride in the improved results while recognizing that more still needs to be done to reach OECD averages. In El País, Colombia's President acknowledged that, despite scoring below OECD averages, the country was one of three that improved in all three tested areas. In Peru, in an interview with TV Peru 7.3, senior researcher and public policy advisor Hugo Ñopo pointed out that if Peru continues to improve at its 2015 rate, it will take 21 years to reach OECD average results. However, he also said, "The fact that our country, which is more heterogeneous and complicated [than other countries that scored better], managed to achieve these results is remarkable."

By contrast, in the Southern Cone countries, which ranked highest in the region, articles were generally critical of plateaus or only marginal increases in scores. For example, in El Pulso, after recognizing Chile's good scores, the Executive Director of the nonprofit foundation Acción Educar said: "The bad news is that Chile has not improved and we remain at a distinct distance from OECD countries and countries with GDPs similar to ours." In Uruguay, an article in El Observador discussed an analysis of PISA results by the Inter-American Development Bank (IDB). The article noted that, based on the 2012 scores, the IDB had predicted it would take Uruguay at least 20 years to reach OECD averages, but that given Uruguay's minimal improvement in 2015, the IDB now considered reaching OECD averages "unattainable" for Uruguay.

In half of the participating LAC countries, articles drew direct connections between the 2015 results and government programs. For example, Colombia's Minister of Education credited Todos a Aprender and Colombia Bilingüe, among other programs, with improving the quality of education across the country. In the Dominican Republic, which took the test for the first time, the government issued a statement that acknowledged the country's low results and stated that government programs would be used to work toward higher-quality education and improved scores. These programs included Jornada Escolar de Tanda Extendida, which extends the school day, and the Pluriannual Plan 2013-2016, which is designed to provide quality education to students with disabilities.

Across the 133 reviewed sources, there is general consensus that the greatest obstacles to high educational performance in the region are unfavorable social contexts, low-quality teaching and the inefficient use of financial resources. On social context, sources highlighted gender, socio-economic and urban-rural inequalities. On teaching, sources identified low teacher wages and qualifications and a lack of interest in teaching as a profession. Sources also agreed that public education resources need to be used more efficiently. Grade repetition and student dropout rates were also discussed as issues that affect the quality of education. Many articles suggested that if these deficiencies were remedied, schools could be transformed into spaces for academic and personal growth.

Civil Society Organizations (CSOs) – Telling It Like It Is

CSOs were the most objective, action-oriented and analytical in their treatment of the PISA 2015 results. For example, in Brazil, Nova Escola identified which types of questions students found most difficult, explored the role and influence of teachers on education quality, and disaggregated results by type of school (federal, private, state and municipal). In Mexico, Mexicanos Primero not only discussed the results but also drew on general conclusions from PISA and other national assessments to propose policy recommendations, citing the Mexican Constitution in support of its proposed actions. Perhaps the best analysis of Argentina's results came from the Universidad Pedagógica's Observatorio Educativo, which both reported the results and critiqued how the PISA exams were structured and conducted.

Media – All Over the Place

Some media articles were light on analysis, often merely reporting results. Nevertheless, they served as "first steps" for drawing regional comparisons and provided a solid grounding in PISA's purpose and usefulness. For example, an article in the Costa Rican newspaper La Prensa Libre consisted primarily of regional and global rankings and basic information about PISA, with occasional quotes from the OECD Secretary-General.

By contrast, other media outlets published in-depth articles that drew on interviews with government officials and experts to provide key country-specific and regional insights. These articles explored the impact of gender and socio-economic gaps on results, as well as differences in public expenditure on education, the effects of migration patterns, and criticisms of PISA. For example, some Peruvian sources included interviews with Hugo Ñopo, senior researcher at GRADE (a nonprofit, nonpartisan public policy research center) and an advisor to the Peruvian Ministry of Labor and Social Promotion, which gave additional weight to the articles' conclusions.

Government – Citing Successful Programs, or Surprisingly Silent

Governments of countries with historically lower scores openly admitted the need for continued progress, but also stressed what their programs had already achieved and put forward recommendations for further improvement. Mexico, for example, recommended using the Servicio de Asesoría Técnica Escolar (School Technical Advisory Service) to ensure that teacher evaluations are taken into account. Surprisingly, Trinidad and Tobago's government released no formal publications reacting to the PISA 2015 results, even though the media reported on and analyzed them.

Untangling the Absence of Results from Argentina

Argentina administered the examination nationally, but something went wrong. After much debate among stakeholders, the OECD decided not to publish Argentina's national results because a change in the sample meant they could not be statistically compared with previous PISA results. However, the city of Buenos Aires (CABA) conducted its own PISA tests, which the OECD accepted and included in the rankings. CABA performed strongly, ranking highest in the region in all three tested subjects. This raises the question of whether the results of a single city can be meaningfully compared with those of entire nations.

As a first-time PISA test-taker, were the Dominican Republic’s expectations too low?

All sources from the Dominican Republic acknowledged that the country recorded the lowest scores in the region. Fourteen articles emphasized that this was the country's first time participating in PISA and that its scores should be treated as a baseline. Fifteen articles stressed that although the Dominican Republic ranked last in the region, it did not rank last among all countries that took part in PISA 2015. Seven articles did not make that distinction and claimed that the country had performed worst in the world. Twelve articles emphasized the Dominican Republic's prospects for improvement.

As a second-time PISA test-taker, was Trinidad and Tobago’s media insufficiently critical?

Trinidad and Tobago first took the PISA test in 2009. Between the 2009 and 2015 tests, the country improved notably in science and reading, gaining 15 and 11 points, respectively. Trinidad and Tobago's media actively covered the country's improved PISA 2015 results, highlighting its comparatively high ranking in the region. However, a few sources went further, stating that Trinidad and Tobago had the highest results in the Caribbean. While true, this is misleading, because only two Caribbean countries participated in the 2015 PISA examinations.

Concluding Remarks

There were clear differences in how LAC sectors covered the PISA results. Media coverage ranged from mere reporting of results to analytical reporting. Governments either attributed higher scores to their programs or left it to other sectors to cover the results. Finally, CSOs remained the most objective and informative across the region.

Across all sectors, reactions demonstrated a common interest in the quality of education in LAC and in the usefulness of PISA as an evaluation tool. The cross-sectoral reactions showed a consensus on the persistent problems and potential solutions.

Research for this report is based on publicly available information about the 10 LAC countries that took the PISA exams in 2015. We searched three sectors for each country, using Spanish keywords (PISA resultados 2015 + país). We reviewed and analyzed the results found on the first two pages of Google searches.

For media, we searched for PISA coverage directly on the websites of each country's prominent newspapers. For government sources, we used the same keywords (PISA resultados 2015 + país) and added "Ministerio de Educación." In some cases, we searched directly on the website of the country's Ministry of Education. To find CSO reactions to the PISA results, we identified and searched education-focused CSOs that were mentioned in the government and media search results.

We used the following questions to categorize the content of articles about PISA: Do they compare the 2015 results with previous PISA results? Do they compare results with other countries? Do they link results or information to country policies or programs? What is the overall tone of the coverage? Do the articles provide analysis and propose solutions or calls for action?

Image Credit: Eric E. Castro / Flickr / CC by 2.0.
